AirOps Product Review for Content Automation: My Honest Thoughts

Oussama Bettaieb

Marketing Director

Lately, I've been testing out a tool called AirOps, and I wanted to give an honest, behind-the-scenes breakdown of how it works, what I liked, what needs improvement, and whether it's worth adding to your stack.

This isn’t a sponsored review. It’s just me, screen recording and building real workflows, trying to see if this thing can actually save us time—or if it's just another flashy AI promise.

The Setup: Can AirOps Help Automate Content Creation?

My goal was pretty specific: I wanted to create a content workflow that would take a keyword and go all the way to a publishable first draft that we could hand off to a human writer.

Rough structure:

- Input a keyword

- Analyze the top 10 Google results

- Generate a meta title

- Create an outline based on our writing guidelines

- Write a first draft

- Evaluate it

- Write a second and third draft based on that evaluation

This isn’t just “write me an article.” It’s more like “write, critique, and iterate—then hand it off to a human.” Think of it as LLM-assisted ghostwriting with checkpoints.

How AirOps Structures Workflows

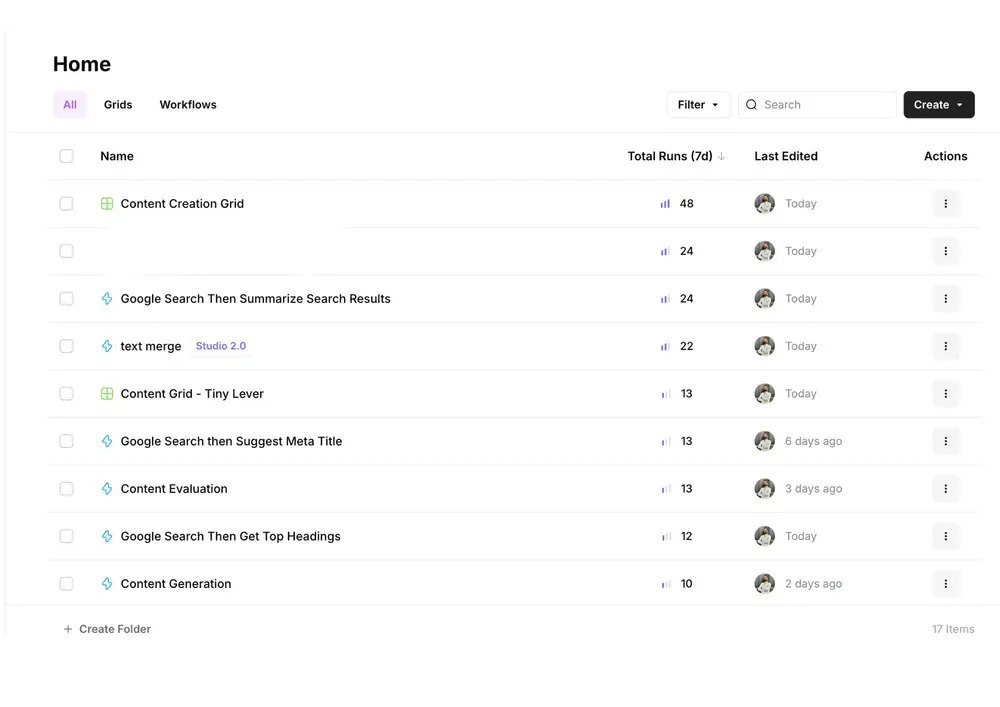

AirOps has two main modes:

- Individual workflows – a detailed process that carries out a defined task (or a group of tasks).

- Grids – basically a dashboard to string together multiple workflows in a sequence.

I went with the grid model so I could control the full pipeline. You start with a blank canvas and stack steps as needed. I named mine Content Grid -tinylever, since I was testing this process on a mock article for tinylevermarketing.com.
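To make the grid idea concrete, here is a rough Python sketch of how such a pipeline could be modeled: one row per keyword, with each column computed from the columns to its left. It is not AirOps code and uses no AirOps API. The step functions are hypothetical stand-ins that only echo their inputs (real steps would call a search API or an LLM), and the keyword is made up.

```python
from typing import Callable, Dict, List, Tuple

# A column is a (name, step) pair. Each hypothetical step reads the row
# built so far and returns one new value.
Column = Tuple[str, Callable[[Dict[str, str]], str]]


def run_grid(keywords: List[str], columns: List[Column]) -> List[Dict[str, str]]:
    """Fill one row per keyword, computing the columns left to right."""
    rows = []
    for keyword in keywords:
        row = {"keyword": keyword}
        for name, step in columns:
            row[name] = step(row)  # later columns can read earlier outputs
        rows.append(row)
    return rows


# Stand-in steps that only echo their inputs so the data flow is visible.
# Real steps would call a search API or an LLM.
columns: List[Column] = [
    ("serp_summary", lambda r: f"summary of top 10 results for '{r['keyword']}'"),
    ("meta_title", lambda r: f"7 Tips for {r['keyword'].title()} in 2025"),
    ("brief", lambda r: f"brief for '{r['meta_title']}' using {r['serp_summary']}"),
    ("draft_1", lambda r: f"first draft written from the {r['brief']}"),
]

rows = run_grid(["content automation"], columns)
print(rows[0]["meta_title"])  # 7 Tips for Content Automation in 2025
```

The useful property is that every column can read all the earlier ones, which is how a later step such as the brief can build on the selected meta title.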

Step One: Keyword Input + Search Summary

The first step was pulling in search results and summarizing them. I used AirOps’ prebuilt “Google Search & Summarize” workflow and customized it slightly to return more actionable insights, like:

“What type of content is ranking for this keyword? Blog posts? Listicles? Homepages?”

This step was pretty smooth. I liked that AirOps visually shows which step it's on and lets you trace the progress. The summary output was structured and useful, even if it didn’t fully follow my custom prompt the first time.

Step Two: Generating a Meta Title

Here’s where things got fun.

AirOps let me create a workflow that pulled in the Google search results, then gave me three AI-generated meta title options. It also let me insert a “human-in-the-loop” step to review and select the best one.

Big win here: I could edit the meta title directly inside the workflow. I added modifiers like “2025” and odd numbers (more clickable), and I fixed grammar issues, all without leaving the tool.

Minor gripe: The prompt I gave didn’t fully enforce that the keyword be present in the title. That’s on me. But also—it’s a reminder that LLMs aren’t mind readers.
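Because the model won’t reliably honor a “must include the keyword” instruction, one option is a deterministic check that runs before the human review step. The Python sketch below is hypothetical: the 60-character limit is a common SEO rule of thumb assumed here rather than an AirOps setting, the odd-number test simply encodes the preference mentioned above, and treating 1900 to 2100 as years is another assumption.

```python
import re
from typing import List


def check_meta_title(title: str, keyword: str, max_len: int = 60) -> List[str]:
    """Return a list of problems; an empty list means the title passes."""
    problems = []
    if keyword.lower() not in title.lower():
        problems.append("missing keyword")
    if len(title) > max_len:
        problems.append(f"longer than {max_len} characters")
    numbers = [int(n) for n in re.findall(r"\d+", title)]
    # Assume numbers between 1900 and 2100 are years; the odd-number
    # preference only applies to list counts like "7 Tips".
    counts = [n for n in numbers if not 1900 <= n <= 2100]
    if counts and all(n % 2 == 0 for n in counts):
        problems.append("list count is even")
    return problems


options = [
    "7 Content Automation Tips for 2025",
    "AirOps Review: Is Content Automation Worth It in 2025?",
    "10 Ways to Speed Up Your Writing",
]
for title in options:
    print(title, "->", check_meta_title(title, "content automation") or "ok")
```

Only titles that pass would reach the human-in-the-loop step, so the reviewer picks among options that already cover the basics.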

Step Three: Creating a Content Brief

This was more complex. I created a knowledge base using past human-written content, client info (like brand voice), and prompt instructions.

AirOps pulled this data in and used it to build a brief with:

- Primary + secondary keywords

- Suggested structure

- Section-level breakdown

- Word count guidance

It worked decently, but the structure sometimes felt too generic or missed key sections I expected based on the SERP analysis (like “Top Agencies for Small Businesses”). So I added a few more columns and prompts to force better alignment with search intent.
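Forcing alignment with the SERP can be framed as a coverage check: compare the outline against the sections competing pages include, and send anything missing back as a revision prompt. A minimal Python sketch, assuming the expected sections have already been extracted from the search summary as a list; the function names and prompt wording are hypothetical, and the substring match is deliberately crude.

```python
from typing import List, Optional


def missing_sections(outline: List[str], expected: List[str]) -> List[str]:
    """Expected sections with no outline heading that mentions them."""
    headings = [h.lower() for h in outline]
    return [s for s in expected if not any(s.lower() in h for h in headings)]


def revision_prompt(missing: List[str]) -> Optional[str]:
    """Build a follow-up instruction for the brief, or None if nothing is missing."""
    if not missing:
        return None
    bullets = "\n".join(f"- {s}" for s in missing)
    return ("Revise the outline. Pages ranking for this keyword cover these "
            f"sections, which the outline is missing:\n{bullets}")


outline = ["What Is a Marketing Agency?", "How to Choose an Agency", "Pricing"]
expected = ["Top Agencies for Small Businesses", "Pricing"]
print(revision_prompt(missing_sections(outline, expected)))
```

An LLM-based comparison could match headings more loosely than this substring test, but even a crude hard check makes gaps like a missing “Top Agencies” section visible.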

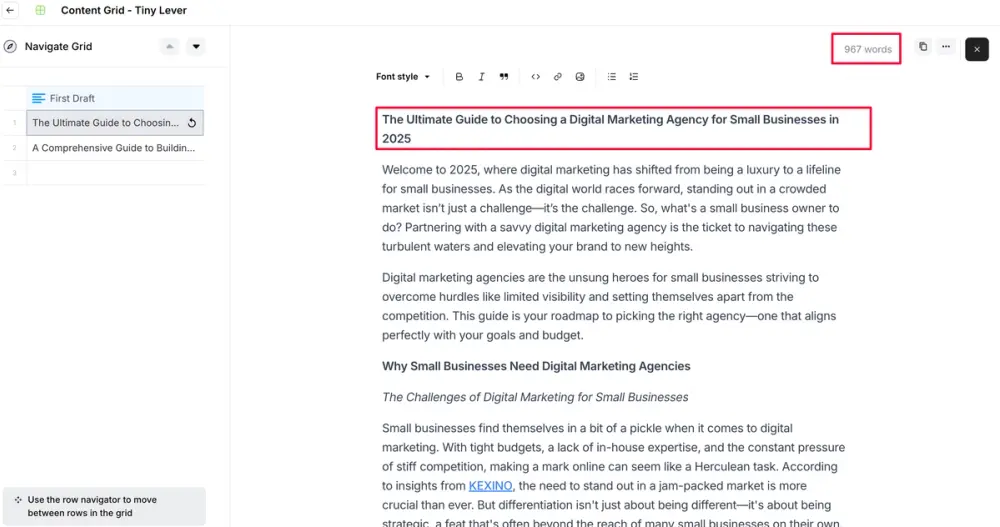

Step Four: Generating the First Draft

Now came the real test: could it write a decent draft?

Yes—kind of.

The first draft came in short (967 words vs. our 2,000-word target), and it drifted away from the keyword I wanted. It also restructured the title, which wasn’t ideal.

But I’ll give it this: the structure and tone were decent. And for a “first AI pass,” it gave us a lot we could work with.

I added a “word count correction” step that prompted the LLM to expand the article when it fell short of the target. I also tried adding a humanizer step to reduce AI-detection scores, but the results there were mixed.
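A word-count correction step can be as simple as the hypothetical Python sketch below (not the AirOps implementation): count the words and, only if the draft is well under target, build an expansion instruction. The 90% tolerance is an arbitrary assumption, and telling the model to keep the title and keyword fixed is an assumed guard against the kind of drift described above.

```python
from typing import Optional


def word_count(text: str) -> int:
    return len(text.split())


def expansion_prompt(draft: str, target: int, tolerance: float = 0.9) -> Optional[str]:
    """Return an expansion instruction if the draft is under tolerance * target words."""
    count = word_count(draft)
    if count >= target * tolerance:
        return None  # close enough; skip the extra LLM call
    shortfall = target - count
    return (f"The draft has {count} words; the target is {target}. "
            f"Expand it by about {shortfall} words by deepening existing sections. "
            "Keep the title and the primary keyword exactly as they are.")


first_draft = "word " * 967  # stand-in for a 967-word first draft
print(expansion_prompt(first_draft, target=2000))
```

Skipping the call when the draft is already close to target avoids asking the model to pad an article that is long enough.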

Step Five: Evaluation & Iteration

This is where I got nerdy.

I built a separate evaluation workflow that scores the draft on:

- Meta title/description inclusion

- Sentence and paragraph structure

- Keyword usage

- Internal/external links

- Formatting

- Tone
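Several of these criteria can be scored with plain code, leaving tone (and other judgment calls) to an LLM evaluator. A hypothetical Python sketch, assuming the draft is Markdown with the meta fields stored alongside it; every threshold below (20-word average sentences, 120-word paragraphs, three keyword mentions) is an illustrative assumption, not a rule from AirOps or from any particular writing guidelines.

```python
import re
from typing import Dict


def evaluate(draft: Dict[str, str], keyword: str, site: str) -> Dict[str, bool]:
    """Pass/fail checks for the criteria that don't need an LLM (tone is left out)."""
    body = draft["body"]
    paragraphs = [p for p in body.split("\n\n") if p.strip() and not p.startswith("#")]
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", body) if s.strip()]
    avg_sentence = sum(len(s.split()) for s in sentences) / max(len(sentences), 1)
    links = re.findall(r"\]\((https?://[^)\s]+)\)", body)  # Markdown link targets
    internal = [u for u in links if site in u]
    return {
        "meta_title_and_description": bool(draft.get("meta_title")) and bool(draft.get("meta_description")),
        "short_sentences": avg_sentence <= 20,
        "short_paragraphs": all(len(p.split()) <= 120 for p in paragraphs),
        "keyword_used": body.lower().count(keyword.lower()) >= 3,
        "internal_link": len(internal) >= 1,
        "external_link": len(links) > len(internal),
        "has_subheadings": re.search(r"^## ", body, flags=re.MULTILINE) is not None,
    }


draft = {
    "meta_title": "7 Content Automation Tips for 2025",
    "meta_description": "How we use AI workflows to speed up first drafts.",
    "body": (
        "# 7 Content Automation Tips\n\n## Why automate\n\n"
        "Content automation saves time. Content automation still needs editors. "
        "See [our guide](https://tinylevermarketing.com/guide) and "
        "[this study](https://example.com/study). Content automation is not magic.\n"
    ),
}
print(evaluate(draft, "content automation", "tinylevermarketing.com"))
```

Returning named pass/fail flags, rather than a single number, gives the improvement step a concrete list of what to fix.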

After that, I fed the evaluation into an improvement workflow, which rewrote the article based on what the evaluator flagged.

I repeated this once more to get a “third draft”—our version of a final AI pass before human review.

Did the iterations get better? Somewhat.

But I’ll be honest: it’s unclear whether each round was significantly better than the last. In some cases, the second or third draft even came in shorter again. Still, the concept of structured feedback plus improvement is solid.
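One way to guard against rounds that don’t help is an explicit stopping rule. Below is a hypothetical Python sketch of an evaluate-and-improve loop that keeps the best draft so far, discards any revision that drops below a word-count floor, and stops as soon as a round fails to raise the score. `score` and `improve` stand in for the evaluator and improvement workflows; they are assumptions, not AirOps functions, and the 1,800-word floor is arbitrary.

```python
from typing import Callable


def iterate(draft: str,
            score: Callable[[str], float],
            improve: Callable[[str], str],
            max_rounds: int = 3,
            min_words: int = 1800) -> str:
    """Run up to max_rounds of improve -> score, returning the best draft seen."""
    best, best_score = draft, score(draft)
    for _ in range(max_rounds):
        candidate = improve(best)
        if len(candidate.split()) < min_words:
            continue  # the revision shrank the article; discard it and retry
        candidate_score = score(candidate)
        if candidate_score <= best_score:
            break  # no measurable gain, so stop spending tokens
        best, best_score = candidate, candidate_score
    return best


# Toy stand-ins: the "evaluator" rewards length up to 2,000 words and the
# "improver" appends 300 words per round.
def toy_score(text: str) -> float:
    return min(len(text.split()), 2000)


def toy_improve(text: str) -> str:
    return text + " more" * 300


final = iterate("word " * 1800, toy_score, toy_improve)
print(len(final.split()))  # 2100: round two added words but no score, so the loop stopped
```

The toy stubs only show the control flow; with a real evaluator, the score would come from whatever rubric it applies, and the same loop would stop paying for rounds that don’t move it.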

Things I Liked About AirOps

✅ Transparent step-by-step execution – You can see where each workflow is in real time.

✅ Customizable – You can tweak every prompt, add human review steps, and chain workflows however you want.

✅ Knowledge base support – Letting the AI pull from your own content improves quality over time.

✅ Affordable – At $199/month for the Studio Starter plan, it’s not bad—especially if you build a process that saves your team hours.

✅ Preset templates – AirOps includes templates for scraping, summarizing, optimizing, and more. Great starting points.

Things That Need Work

❌ Prompt adherence is hit-or-miss – Sometimes you have to babysit the LLM a bit to get what you want.

❌ Some outputs lacked nuance – Even with a clear brief, the AI occasionally misjudged what type of article to write.

❌ Limited auto-iteration – I couldn’t tell whether feedback from one draft could keep driving improvements automatically, without my triggering each round manually.

❌ Content still needs human oversight – I wouldn’t trust the current setup to fully replace a human editor. It can get you to a strong draft, but not a finished product.

Final Thoughts

AirOps is a solid tool with a lot of potential.

It’s not going to eliminate your need for content writers, but it can absolutely accelerate the writing process—especially if you already have a tight workflow.

If you’re willing to experiment, adjust prompts, and bring a human editor into the loop, AirOps can be a valuable part of your content machine. And since it’s modular, you can apply the same logic to other parts of your marketing: internal linking, backlink outreach, image generation, and more.

The bottom line?

AirOps is worth trying—especially if you’re process-driven and don’t mind getting your hands dirty in setup. Just don’t expect perfection out of the box.